(SWM) ACI Topology and Hardware part 2

29 Mar 2020

Onward and upward! This is Part deux of the “ACI Topology and Hardware” section, another Study-With-Me post. If you’re interested, check out Part 1.

This section covers the hardware that makes up the ACI fabric. Hardware has a lot of detail, so I hope my coverage is enough for now; the blueprint is not very specific about what Cisco wants an engineer to know.

DISCLAIMER: The hardware models mentioned in this post will change, since hardware is always going through iterations. The data here is accurate as of March 2020.

Before I get started, I want to share a link I find useful almost every week at work: the Cisco N9k “Compare Models” page. I use it any time I need to check whether a switch supports NX-OS or ACI, or to verify port speeds, etc.

Study time:

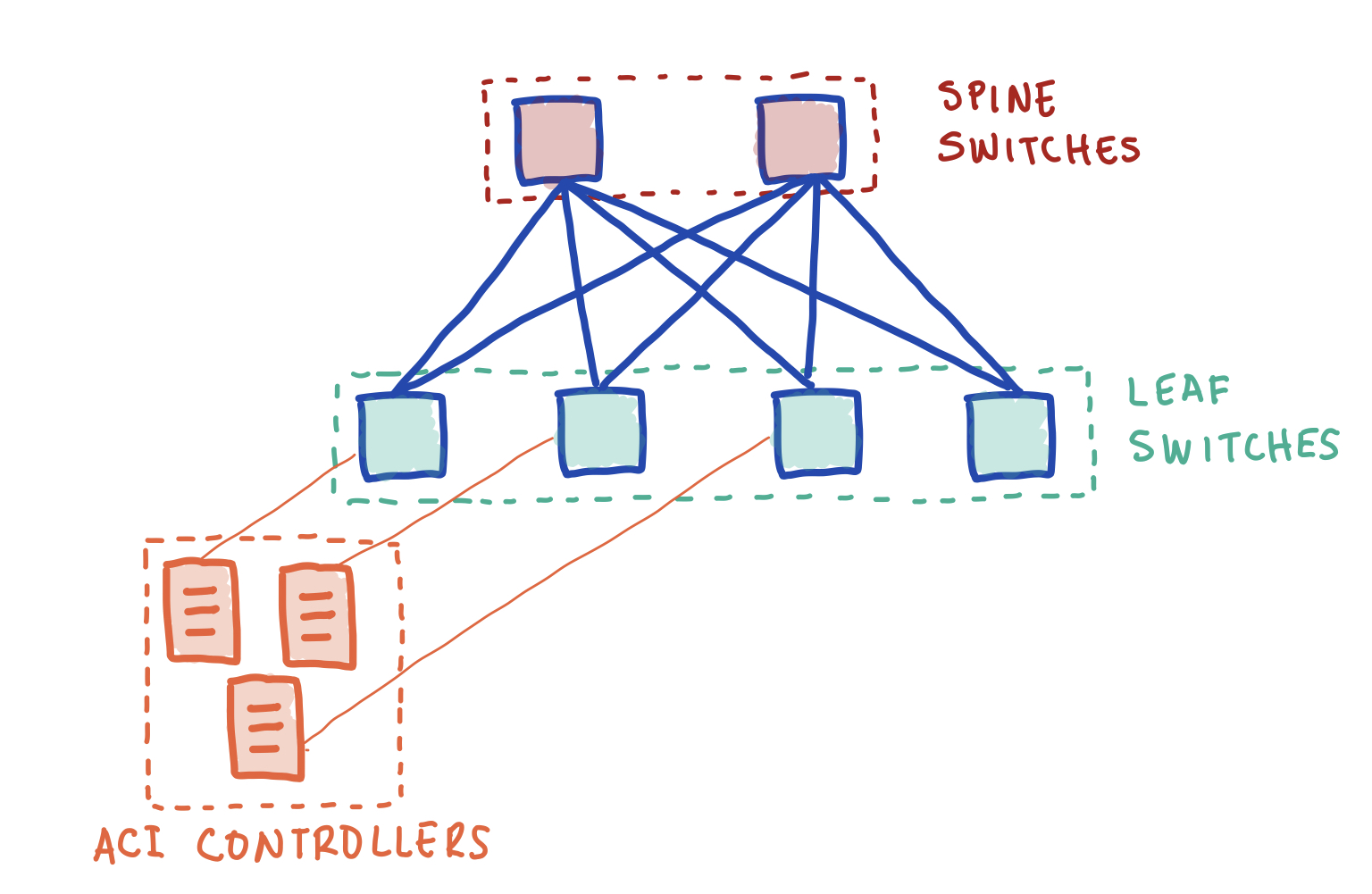

What are the main components of Cisco ACI?

- APIC (aka ACI Controllers, controllers)

- Spine

- Leaf

What hardware supports APIC?

- The 3-node cluster (the minimum cluster size) runs on Cisco rack-mount servers.

    - Cisco UCS C220 (M3/M4 generation) servers, i.e., the Cisco APIC appliances.

    - The APIC image is preinstalled by Cisco.

    - The servers are TPM-secured.

- The product ID determines the processor, memory, and storage capacity of each node in the cluster.

    - For example, APIC-CLUSTER-M3 for medium-sized nodes or APIC-CLUSTER-L3 for large-sized nodes.

    - See the product specifications for details on each APIC size.

- Although Cisco offers a virtualized APIC image, it’s not supported for enterprise (production) deployments.

    - It’s useful for labs and POCs.

- Cisco Cloud APIC is Cisco’s virtual image for endpoint learning and policy management across on-premises fabrics and public cloud providers.

    - It seems to require Multi-Site Orchestrator (MSO).

    - Although it’s not part of the blueprint, I’d be interested to learn more from those who have deployed Cloud APIC. HMU.

How many nodes are needed in an APIC cluster?

- Cluster size determines how far the fabric can scale.

- 3 nodes (minimum):

    - Up to 80 LEAF switches

    - 6 SPINEs

    - 1000 Tenants

    - 1000 VRFs

- 4 nodes:

    - Up to 200 LEAF switches

    - Same as a 3-node cluster for the other specs.

- 5-7 nodes:

    - 3000 Tenants

    - 3000 VRFs

    - Same as a 4-node cluster for the other specs.
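To make the sizing tiers above concrete, here is a minimal Python sketch that picks the smallest APIC cluster tier for a planned fabric. It assumes only the limits listed above (ACI 4.x, March 2020); the `CLUSTER_TIERS` table and the `smallest_cluster` function are hypothetical study aids, not a Cisco tool, so check the Verified Scalability Guide for your release before relying on any number.

```python
from typing import Optional

# Hypothetical study aid: scale limits as summarized in this post
# (ACI 4.x, March 2020). Not an authoritative copy of Cisco's
# Verified Scalability Guide; always check the guide for your release.
CLUSTER_TIERS = [
    ("3 nodes", {"leafs": 80, "spines": 6, "tenants": 1000, "vrfs": 1000}),
    ("4 nodes", {"leafs": 200, "spines": 6, "tenants": 1000, "vrfs": 1000}),
    ("5-7 nodes", {"leafs": 200, "spines": 6, "tenants": 3000, "vrfs": 3000}),
]


def smallest_cluster(leafs: int, spines: int, tenants: int, vrfs: int) -> Optional[str]:
    """Return the smallest cluster tier whose limits cover the fabric, or None."""
    wanted = {"leafs": leafs, "spines": spines, "tenants": tenants, "vrfs": vrfs}
    for tier, limits in CLUSTER_TIERS:
        # A tier fits only if every requested value is within its limit.
        if all(wanted[key] <= limits[key] for key in wanted):
            return tier
    # Nothing in the table covers this fabric.
    return None


if __name__ == "__main__":
    print(smallest_cluster(leafs=40, spines=4, tenants=50, vrfs=100))     # 3 nodes
    print(smallest_cluster(leafs=120, spines=4, tenants=50, vrfs=100))    # 4 nodes
    print(smallest_cluster(leafs=120, spines=4, tenants=2000, vrfs=100))  # 5-7 nodes
    print(smallest_cluster(leafs=300, spines=4, tenants=50, vrfs=100))    # None
```

The tiers are checked in order, so the first one whose limits cover every requested value is the smallest cluster that fits; a `None` result means the fabric is beyond what these notes cover.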

What hardware supports LEAF/SPINE switches?

- ACI is supported by the N9k switch family.

- Not every N9k model can take the LEAF or SPINE role.

Which two lines of Cisco switches support ACI?

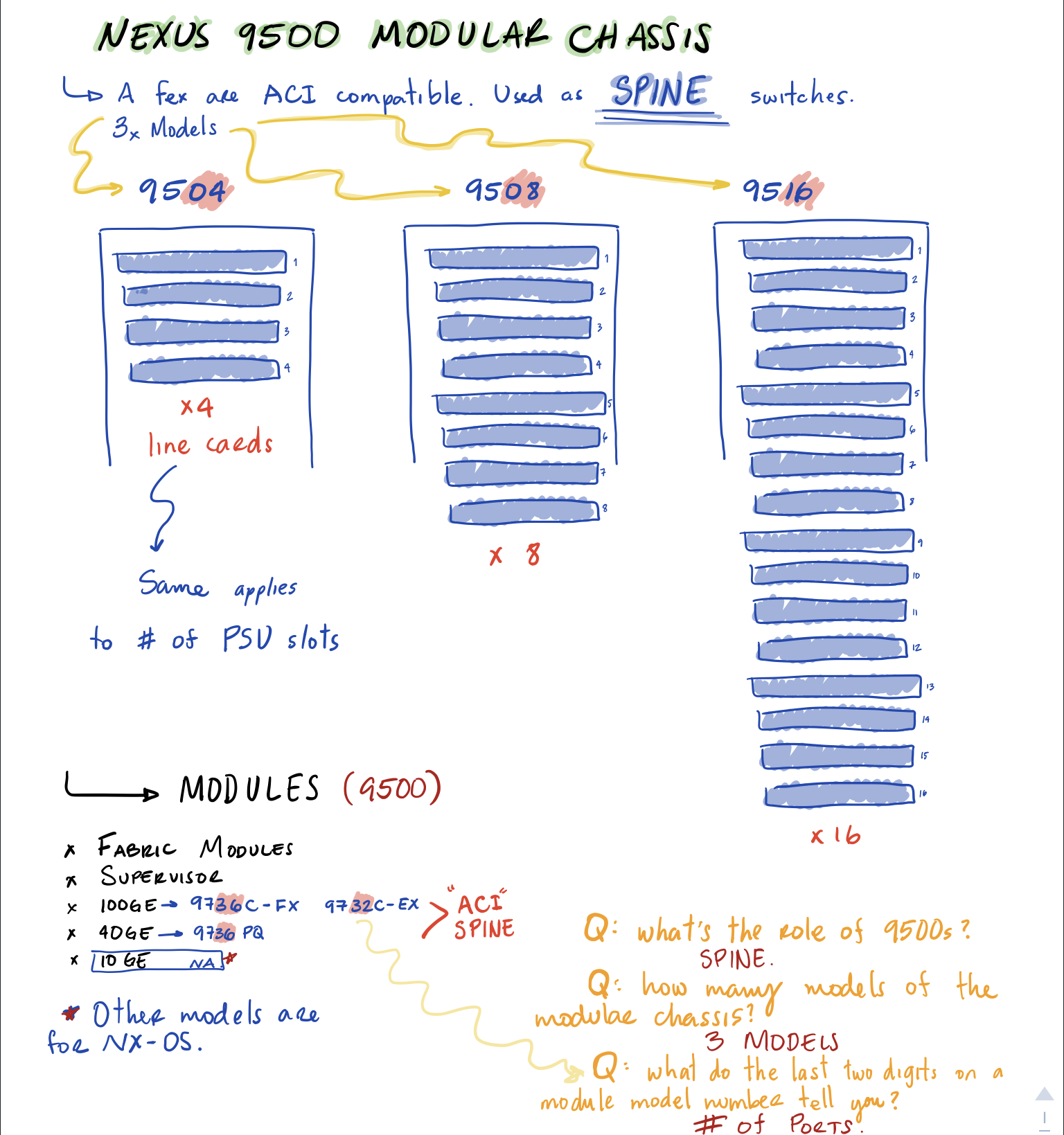

- 9500s (modular switches).

    - The 9732/9736 line cards are considered part of the 9500 family.

- 9300s (fixed form-factor switches).

Do all N9k support ACI?

- No. Some N9k models run NX-OS only. This post focuses only on the ones that support ACI.

What switches support SPINE role?

- For fixed form-factor switches, only the 40G/100G/400G models support the SPINE role. That makes sense: spines are your aggregation layer, so you want all the bandwidth.

- For modular switches, both the 40G and 100G line cards support it.

    - 9736C-FX and 9732C-EX for 100G

    - 9736PQ for 40G

- The modular chassis come with 4, 8, or 16 line card slots.

    - This provides a flexible architecture.

    - It's easy to scale up for increased LEAF support.

What switches support the LEAF role?

- A LEAF is a top-of-rack (TOR) switch, so only the fixed form-factor N9k models support the role.

- The 100M/1G/10G/25G switches support it. That makes sense: most endpoints have those ports today.

- The newer Cloud Scale ASICs also allow 40G/100G switches to act as LEAFs for those who need it. Looking at you, Palo Alto firewalls with 100G ports.

- The same ASIC family allows 400G models as LEAFs, with 100G ports down to the access layer and 400G uplinks (e.g., the 93600CD-GX).

    - Supported only on ACI 4.2 and higher.

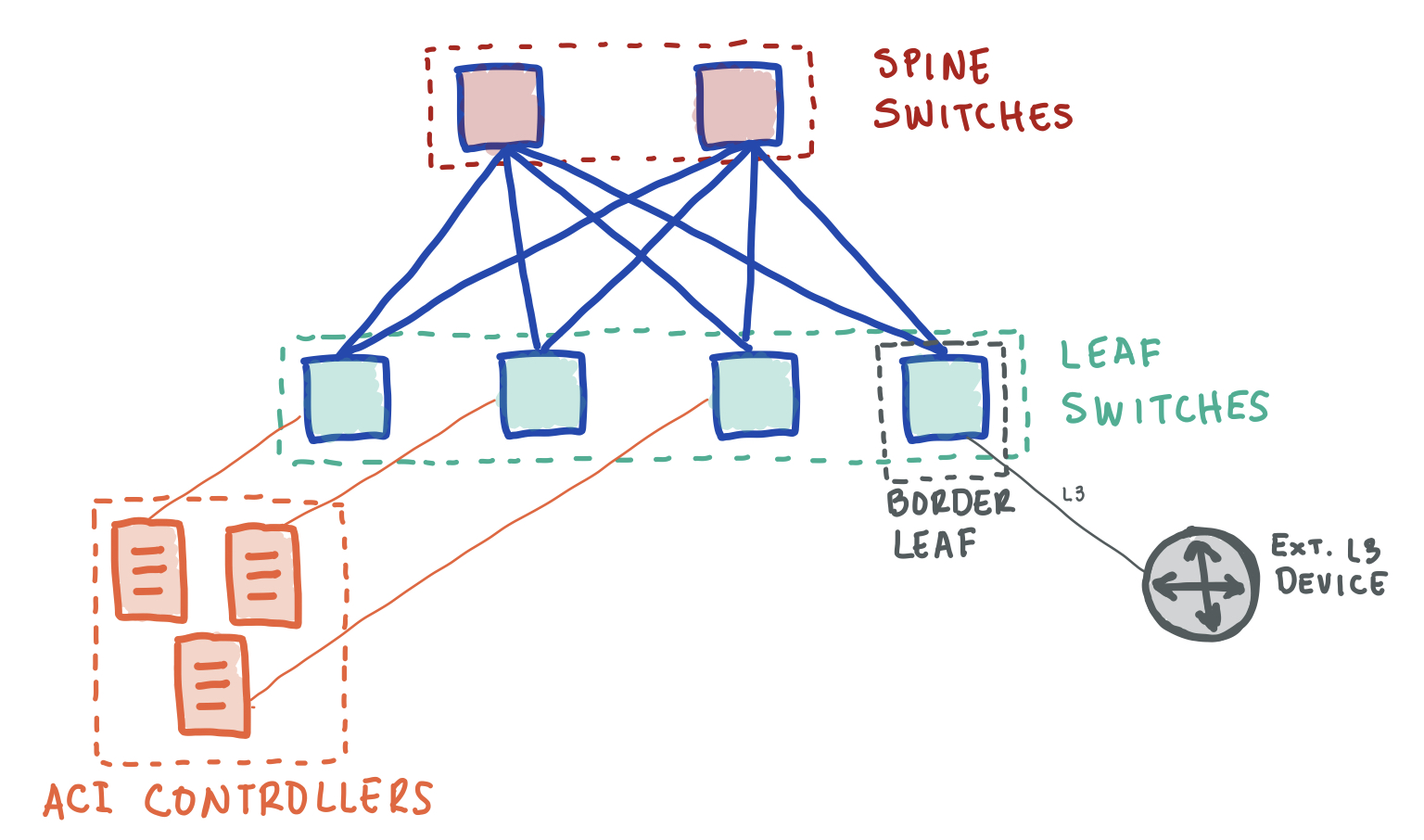

What is an ACI Border LEAF?

- A LEAF switch that connects the fabric to an external network (one outside the fabric).

    - For example, connecting a router to the fabric.

- Supports connectivity via SVIs, routed interfaces, and routed sub-interfaces.

    - See Part 1’s external connectivity question for protocol support.

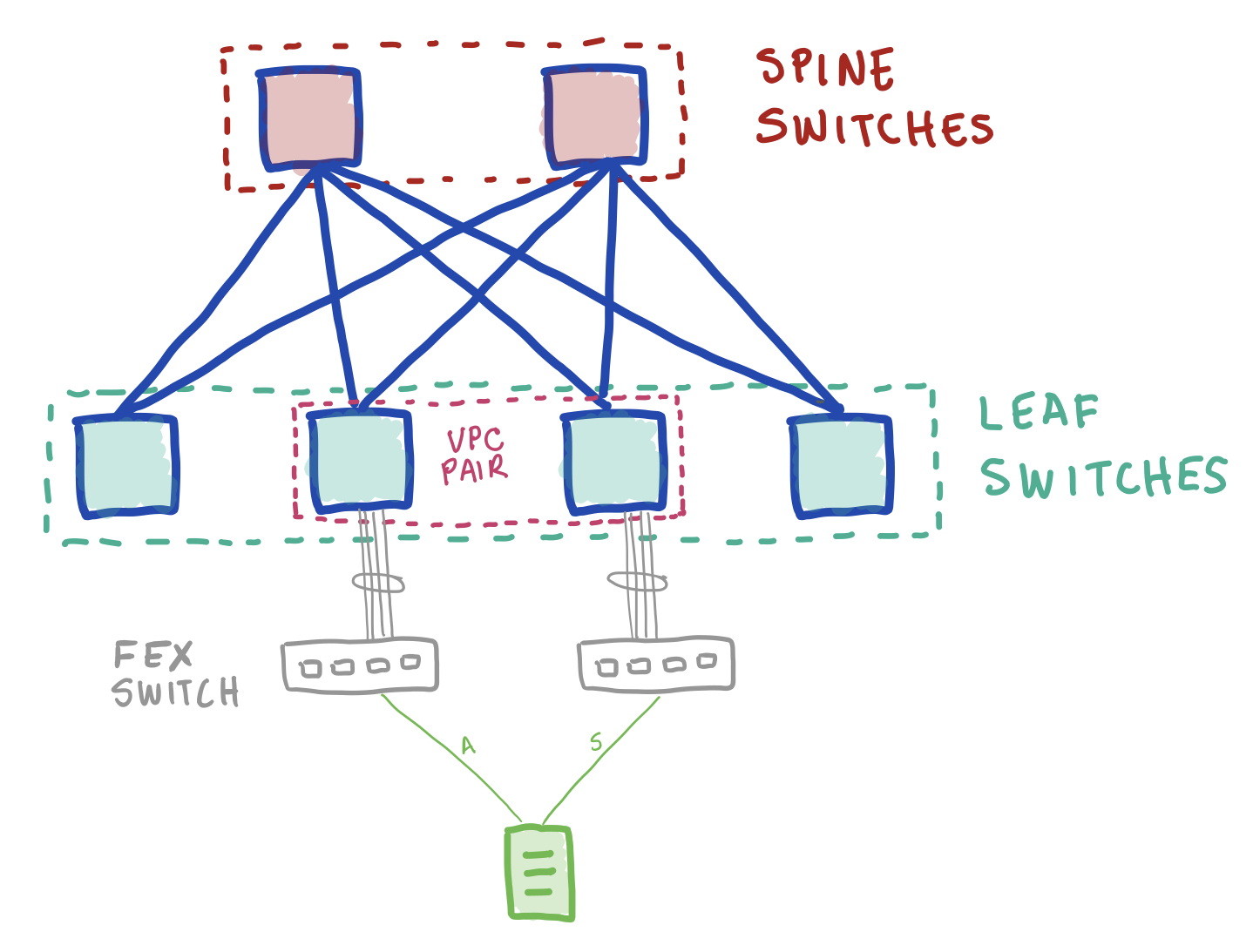

Is there FEX support in ACI?

- Yes, with 40G uplinks.

    - 10G breakout cables are supported.

- A FEX can only be connected to a LEAF switch.

    - Straight-through port-channel from LEAF1 to FEX1.

    - Straight-through port-channel from LEAF2 to FEX2.

    - vPC from FEX1 and FEX2 to the host.

The server could be connected with link aggregation; for some reason I drew an active/standby topology.
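Because ACI attaches each FEX straight-through to a single LEAF (dual-homing one FEX to two LEAFs is not supported), a cabling plan can be sanity-checked by confirming that no FEX has uplinks landing on more than one LEAF. This is a minimal Python sketch assuming a hypothetical inventory of `(leaf, fex)` uplink pairs; `validate_fex_uplinks` is an illustrative helper, not part of any Cisco API.

```python
from collections import defaultdict


def validate_fex_uplinks(uplinks: list[tuple[str, str]]) -> list[str]:
    """Flag any FEX whose uplinks land on more than one LEAF.

    `uplinks` is a hypothetical cabling inventory: one (leaf, fex) pair per
    physical uplink. Straight-through attachment means each FEX should map
    to exactly one LEAF.
    """
    leafs_per_fex: dict[str, set[str]] = defaultdict(set)
    for leaf, fex in uplinks:
        leafs_per_fex[fex].add(leaf)

    errors = []
    for fex in sorted(leafs_per_fex):
        leafs = sorted(leafs_per_fex[fex])
        if len(leafs) > 1:
            errors.append(f"{fex} is dual-homed to {', '.join(leafs)}")
    return errors


if __name__ == "__main__":
    # Straight-through plan: FEX1 on LEAF1, FEX2 on LEAF2, two uplinks each.
    plan = [("LEAF1", "FEX1"), ("LEAF1", "FEX1"), ("LEAF2", "FEX2"), ("LEAF2", "FEX2")]
    print(validate_fex_uplinks(plan))  # []
    # Adding a LEAF2 -> FEX1 uplink would dual-home FEX1.
    print(validate_fex_uplinks(plan + [("LEAF2", "FEX1")]))
    # ['FEX1 is dual-homed to LEAF1, LEAF2']
```

Grouping by FEX rather than by LEAF is deliberate: one LEAF can host several FEXes, but each FEX must see exactly one LEAF.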


Resources

- ACI Design Guide White Paper

- Cisco Application Policy Infrastructure Controller Data Sheet

- Cisco Application Centric Infrastructure Fabric Hardware Installation Guide

- Verified Scalability Guide for Cisco APIC - ACI 4.x

- Cisco Live presentation: Cisco Nexus 9000 Architecture (BRKDCN-3222). Start at 1:06:17 for the N9k platforms, slides 43-67.

- Clos (Spine & Leaf) Architecture – Overview

Glossary:

APIC: Application Policy Infrastructure Controller

TPM: Trusted Platform Module, a chip that securely stores cryptographic keys (e.g., RSA keys) and supports platform integrity checks.

N9k: Nexus 9000 series switches

ASIC: Application-specific integrated circuit

Final Thoughts

This was a very good session. I think the Cisco Live presentation will require its own review in the future; there’s a lot to unpack there.

I didn’t cover hardware like the Super Spines or the Remote Leafs, but I hope to do so in the future.

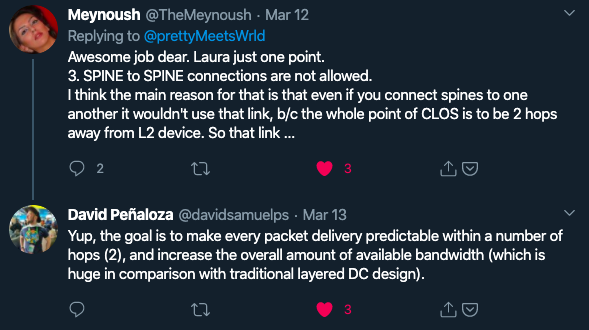

Finally, I’d like to give a shout out to Meynoush and my friend David for some really good feedback on my last post.

As always, all feedback is welcome.
